Workflows: Empowering Teams in the AI Organization

January 6, 2026

Pramit Rajkrishna

Director Solution Consulting

Pramit is the Director of Digital Strategy for Sitation, specializing in product data management and governance, PIM implementations, and site, organic, and paid search. He currently oversees consulting and PIM implementation engagements with Sitation’s enterprise and mid-market customers. Prior to joining Sitation in 2019, Pramit was the Data Strategy and Operations Manager for the Digital division of Arrow Electronics (Fortune 102) in Denver, CO, from 2015 to 2019, where he drove the reimagining of the digital experience for the company’s core electronics components distribution business.

Pramit has a Master’s in Electrical Engineering from Colorado State University and is certified in the Riversand and Salsify PIM platforms. He is also certified in Lean-Agile and is a Certified Scrum Product Owner (CSPO) through Scrum Alliance.

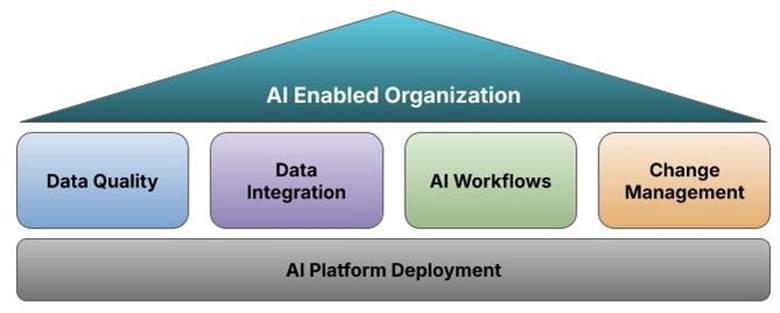

This blog is the fourth in a series covering the fundamentals of organization-wide AI platform deployment and adoption.

In parts one, two, and three, we covered the three core aspects of AI adoption in the organization for delivering value:

- Data Quality

- AI Algorithms

- Data Integration

In this blog, we will cover the fourth aspect: the crucial role of effective AI workflows as a prerequisite for the AI-enabled organization.

The key foundational elements of AI workflows for effective AI implementations are four-fold:

- Use Cases

- Workflow Definition

- Execution Time (Efficiency)

- Resource Provisioning and Security

Use Cases

The core rationale for deploying AI at the enterprise level is to accelerate business outcomes and realize value from AI adoption. Business outcomes can range from direct KPIs, such as revenue and customer growth, to indirect improvements in organizational efficiency, such as automated finance and HR capabilities. The use case definition phase for AI workflows is arguably the most important component: it not only provides the rationale for setting up AI models, but also justifies the significant capital investment, time, and effort of deploying AI models as part of the enterprise stack.

At this stage, providing expected build and execution timelines along with clear expected outcomes helps set realistic expectations for the models and their projected results, so that any deviations can be factored in before effort is invested in building the models and the structures around them. Buy-in on time and resource commitments from both IT and business leadership is also required, so that the use case definition phase accounts for all necessary stakeholders and leads to realistic outcomes and a successful deployment.
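To make the use case definition concrete, the sketch below captures a use case as a simple Python record holding its expected outcome, its expected timelines, and the stakeholder sign-offs required before build work begins. The class, its fields, and the example values (a 10% target, 12 weeks, 30 minutes) are hypothetical placeholders for illustration, not a prescribed template or a specific tool's API.

```python
from dataclasses import dataclass, field


@dataclass
class UseCaseDefinition:
    """Hypothetical record of an AI use case agreed on before any model is built."""

    name: str
    business_outcome: str          # the KPI the workflow is expected to move
    target_kpi_change_pct: float   # expected improvement, agreed up front
    expected_build_weeks: int      # expected build timeline
    expected_run_minutes: int      # expected execution time per workflow run
    required_signoffs: set[str] = field(default_factory=lambda: {"IT", "Business"})
    signoffs: set[str] = field(default_factory=set)

    def sign_off(self, stakeholder: str) -> None:
        # Record a stakeholder's commitment of time and resources.
        self.signoffs.add(stakeholder)

    def missing_signoffs(self) -> set[str]:
        return self.required_signoffs - self.signoffs

    def ready_for_build(self) -> bool:
        # Build effort starts only once every required stakeholder has committed.
        return not self.missing_signoffs()


# Hypothetical example values for an inventory forecasting use case.
inventory_use_case = UseCaseDefinition(
    name="Inventory forecasting",
    business_outcome="Reduce stockouts",
    target_kpi_change_pct=10.0,
    expected_build_weeks=12,
    expected_run_minutes=30,
)
inventory_use_case.sign_off("IT")
print(inventory_use_case.ready_for_build())   # False
print(inventory_use_case.missing_signoffs())  # {'Business'}
```

Keeping the sign-offs on the same record as the expected outcomes makes it easy to see, before any model work starts, which commitments are still outstanding.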

Workflow Definition

Once the use cases have been defined, the next task is to build out the workflow stages, combining data pipelines, formats, systems, and teams and mapping them to specific business outcomes. For example, in the manufacturing and distribution space, an AI workflow for inventory forecasting will potentially involve the following steps:

- Aggregate inventory and order data from ERP systems

- Transform the data in the data integration layer into the correct data points for analysis

- Set up a staging area that converts incoming data files into a model-readable format and size

- Deliver the data into the organization-specific model and track processing time

- Receive the processed output in a staging location and deliver it to downstream analytics systems for review and feedback by business users
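The inventory forecasting steps above can be sketched as an ordered list of stages, each with an accountable owner and a measured processing time. This is a minimal illustration under stated assumptions: the ERP extract is hard-coded sample data, the team names are hypothetical, and a three-week moving average stands in for the organization-specific AI model.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Stage:
    name: str
    owner: str                    # team accountable for this stage (hypothetical)
    run: Callable[[Any], Any]


def aggregate(_: Any) -> list[dict]:
    # Stand-in for an ERP extract of weekly units ordered per SKU (sample data).
    return [
        {"sku": "A-100", "week": 1, "units": 40},
        {"sku": "A-100", "week": 2, "units": 50},
        {"sku": "A-100", "week": 3, "units": 60},
        {"sku": "B-200", "week": 1, "units": 10},
        {"sku": "B-200", "week": 2, "units": 14},
        {"sku": "B-200", "week": 3, "units": 12},
    ]


def transform(rows: list[dict]) -> dict[str, list[int]]:
    # Reshape rows into a per-SKU demand series ordered by week.
    series: dict[str, list[int]] = {}
    for row in sorted(rows, key=lambda r: (r["sku"], r["week"])):
        series.setdefault(row["sku"], []).append(row["units"])
    return series


def stage_for_model(series: dict[str, list[int]]) -> dict[str, list[int]]:
    # Size the input: keep only the three most recent weeks per SKU.
    return {sku: values[-3:] for sku, values in series.items()}


def forecast(window: dict[str, list[int]]) -> dict[str, float]:
    # Placeholder model: a simple moving average, not an actual AI model.
    return {sku: sum(v) / len(v) for sku, v in window.items()}


def deliver(predictions: dict[str, float]) -> dict[str, float]:
    # Stand-in for publishing results to a downstream analytics system.
    return predictions


def run_workflow(stages: list[Stage]) -> tuple[Any, dict[str, float]]:
    """Run stages in order, passing each output on and timing every stage."""
    data: Any = None
    timings: dict[str, float] = {}
    for stage in stages:
        start = time.perf_counter()
        data = stage.run(data)
        timings[stage.name] = time.perf_counter() - start
    return data, timings


STAGES = [
    Stage("aggregate", "ERP team", aggregate),
    Stage("transform", "Data integration team", transform),
    Stage("stage", "Data engineering team", stage_for_model),
    Stage("model", "Data science team", forecast),
    Stage("deliver", "Analytics team", deliver),
]

predictions, timings = run_workflow(STAGES)
print(predictions)  # {'A-100': 50.0, 'B-200': 12.0}
```

Attaching an owner to every stage and recording per-stage timings gives each team a clear handoff point and provides the processing-time data that the tracking step calls for.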

Similar steps can be defined for high-value use cases in retail, energy, and healthcare, among other sectors. The key point is to clearly define ownership of and expectations for each workflow stage, along with a clear feedback mechanism for calibrating the model, so that the AI deployment delivers the expected outcomes.
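One way to implement such a feedback mechanism is to compare the model's forecasts with the actual values reported back by business users, and to flag the model for recalibration when the error exceeds an agreed tolerance. The sketch below uses mean absolute percentage error (MAPE) as the error measure; the choice of metric, the 15% threshold, and the sample numbers are assumptions for illustration.

```python
def mean_absolute_percentage_error(actual: list[float], predicted: list[float]) -> float:
    """MAPE in percent, skipping periods where actual demand was zero."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    errors = [abs(a - p) / abs(a) for a, p in zip(actual, predicted) if a != 0]
    if not errors:
        raise ValueError("no non-zero actuals to compare against")
    return 100.0 * sum(errors) / len(errors)


def needs_recalibration(
    actual: list[float], predicted: list[float], threshold_pct: float = 15.0
) -> bool:
    # threshold_pct is a hypothetical tolerance agreed with the business owner.
    return mean_absolute_percentage_error(actual, predicted) > threshold_pct


# Hypothetical weekly demand: actuals reported by business users vs. forecasts.
actual = [100.0, 80.0, 120.0]
predicted = [90.0, 88.0, 180.0]
mape = mean_absolute_percentage_error(actual, predicted)
print(f"MAPE: {mape:.1f}%")                   # MAPE: 23.3%
print(needs_recalibration(actual, predicted))  # True
```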

Execution Time (Efficiency)

Once AI workflows are defined and being built, it is extremely important to ensure that expected outcomes are delivered in a timely manner to drive business value and decision making. Workflow execution time can be treated as a function of the total number of execution stages, algorithm run time, data volume, and the average delay per stage before and after the AI model.

- Total Execution Stages – More complex workflows will have a higher execution time because of their larger number of stages, as well as a higher probability of failure at some stage

- Algorithm Run Time – The choice of AI model is a key factor in workflow timing, and the model should be selected based on benchmarking data to ensure it is appropriate for the use case

- Data Volume – Data volume correlates directly with workflow execution time, as stages take longer to execute and the model takes longer to run

- Average Delay per Stage – Once the model deployment stabilizes, the average delay per stage serves as a benchmark for workflow runtime, and the objective should be to reduce it over time for efficiency gains
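The four factors above can be combined into a rough runtime estimate. The sketch below assumes a simple linear model: each non-model stage costs a fixed delay plus a per-record cost, the model adds a per-record run time taken from benchmarking, and a failed stage is rerun until it succeeds, giving an expected 1 / (1 - q) attempts for a per-attempt failure probability q. The parameter names and values are hypothetical, and real workflows rarely scale this cleanly.

```python
from dataclasses import dataclass


@dataclass
class WorkflowProfile:
    n_stages: int               # total execution stages, excluding the model itself
    avg_stage_delay_s: float    # fixed overhead per stage (handoffs, queuing, I/O)
    per_record_stage_s: float   # processing time per record in each non-model stage
    model_s_per_record: float   # algorithm run time per record, from benchmarking
    stage_failure_prob: float   # chance a single stage attempt fails and is rerun


def estimated_runtime_s(p: WorkflowProfile, records: int) -> float:
    """Expected wall-clock seconds for one run under a simple linear model."""
    if not 0.0 <= p.stage_failure_prob < 1.0:
        raise ValueError("stage_failure_prob must be in [0, 1)")
    per_stage = p.avg_stage_delay_s + p.per_record_stage_s * records
    # A failed stage is rerun until it succeeds: expected attempts = 1 / (1 - q).
    retry_factor = 1.0 / (1.0 - p.stage_failure_prob)
    stage_time = p.n_stages * per_stage * retry_factor
    model_time = p.model_s_per_record * records
    return stage_time + model_time


def clean_run_probability(p: WorkflowProfile) -> float:
    # Probability that no stage fails during a run; shrinks as stages are added.
    return (1.0 - p.stage_failure_prob) ** p.n_stages


# Hypothetical profile: 4 stages, 30 s overhead each, 1 ms/record per stage,
# 2 ms/record for the model, and no stage failures.
profile = WorkflowProfile(4, 30.0, 0.001, 0.002, 0.0)
print(estimated_runtime_s(profile, 100_000))
```

Under these assumptions, doubling the record count roughly doubles the volume-dependent terms, while adding stages both adds fixed delay and lowers the chance of a clean run, which mirrors the points in the list above.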

Resource Provisioning and Security

Finally, as AI workflows are deployed, infrastructure teams need to closely monitor model performance and resource provisioning to ensure that workflow execution time is not impacted by stage failures or abnormal data volumes. In addition, given the sensitive nature of workflow output, robust authentication and access controls should be used to ensure that only intended recipients with the appropriate privileges can view the data.
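The monitoring and access checks described above can be sketched as follows: a run is flagged if any stage reports a failure or if its record count deviates sharply from recent history, and access to an output is checked against a list of permitted roles. The z-score test is one assumed choice of anomaly rule among many, and the role names, output name, and 3.0 threshold are hypothetical. Authentication itself would normally be handled by the enterprise identity provider; this sketch covers only the authorization check that follows it.

```python
from statistics import mean, stdev


def is_abnormal_volume(history: list[int], current: int, z_threshold: float = 3.0) -> bool:
    """Flag a run whose record count sits far outside recent history (z-score test)."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > z_threshold


def check_run(stage_status: dict[str, str], history: list[int], current: int) -> list[str]:
    # Collect alerts for failed stages and for abnormal input volume.
    alerts = [f"stage failed: {name}" for name, status in stage_status.items() if status != "ok"]
    if is_abnormal_volume(history, current):
        alerts.append(f"abnormal data volume: {current} records")
    return alerts


# Hypothetical role-based access list for workflow outputs.
OUTPUT_ACL = {"inventory_forecast": {"supply_chain_analyst", "planning_manager"}}


def can_view(output_name: str, user_roles: set[str]) -> bool:
    # Assumes the user is already authenticated; this is the authorization step.
    return bool(OUTPUT_ACL.get(output_name, set()) & user_roles)


history = [1000, 1050, 980, 1020, 1010]  # hypothetical record counts from recent runs
alerts = check_run({"aggregate": "ok", "transform": "failed"}, history, current=5000)
print(alerts)  # ['stage failed: transform', 'abnormal data volume: 5000 records']
print(can_view("inventory_forecast", {"supply_chain_analyst"}))  # True
```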

The standard monitoring tools available for AI models, combined with enterprise infrastructure management and monitoring tools and robust authentication mechanisms, should round out the deployment and ongoing maintenance of AI workflows. In summary, AI workflows require considerable forethought and planning before build-out and deployment, and they call for a measured approach to workflow planning, development, and management. In the next and final blog of this AI series, we will cover the change management aspect of AI deployments for the AI-enabled enterprise.

Contact us for a more in-depth discussion on workflows and AI platform deployment and adoption.