The goal of a PIM is to easily enrich and store product data for downstream destinations. How the data should flow to downstream destinations is a critical piece of any implementation. For Akeneo Serenity customers, the choice is an easy one: API. Extending the PIM isn’t supported for Serenity, so the only path is the API endpoints for assets. That’s the core trade-off of Serenity vs Flexibility: The former provides monthly feature releases with support options and the latter provides the option to extend any of the codebase.

When handling very large collections of assets (100+ GB), it can be difficult to create an efficient sync process for downstream software. Using a CDN for asset storage allows a file to be written once in Akeneo and then read directly from the CDN, rather than being stored in two places (Akeneo and downstream).

Luckily, Akeneo Flexibility supports external file storage for assets, and the Akeneo docs even include a walkthrough for AWS S3 support.

Akeneo is built on the Symfony platform and includes the flysystem bundle to support external file storage for the application. This allows relatively straightforward configuration of the appropriate flysystem bundle to support your cloud provider of choice. AWS is supported out of the box, but Azure Blob Storage is fully functional with a few minor changes:

1. Modify your composer.json with:

    "microsoft/azure-storage-blob": "^1.5",
    "league/flysystem-azure-blob-storage": "^1.0",
    "oneup/flysystem-bundle": "3.5.0 as 3.1.0",

The first two lines add Microsoft's Blob Storage client for Azure and the League Flysystem adapter for Azure: https://flysystem.thephpleague.com/v1/docs/adapter/azure/.

Here is the tricky part of the installation: Akeneo Enterprise 4.0 relies on version 3.1.0 of the flysystem-bundle, whereas flysystem-azure-blob-storage requires a later version. Composer's inline alias (`3.5.0 as 3.1.0`) lets us install the later version while still presenting it to Akeneo as the earlier one. (5.0 doesn't share this issue.)

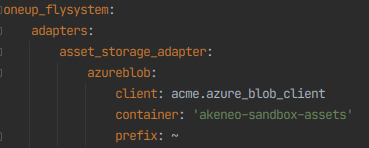

2. Modify your `config/packages/prod/oneup_flysystem.yml` so that whichever adapter you are moving (in our case, assets) uses the Azure config:

New:

Note: Akeneo 5.0 has a different naming convention here, but the structure of the yml file is the same.
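As a rough illustration of the adapter change in step 2, a minimal sketch might look like the following. It assumes the `azureblob` adapter type described in the oneup/flysystem-bundle documentation and the `acme.azure_blob_client` service defined in step 4. The adapter key (`asset_storage_adapter`) and the container name (`akeneo-assets`) are hypothetical, so check the adapter names already present in your own `oneup_flysystem.yml` before copying anything.

```yaml
# Sketch only: the adapter key and container name are assumptions, not Akeneo defaults.
oneup_flysystem:
    adapters:
        asset_storage_adapter:                   # hypothetical key; use the asset adapter name from your file
            azureblob:
                client: acme.azure_blob_client   # service id defined in step 4
                container: 'akeneo-assets'       # hypothetical Azure container name
```

Only the adapter definition changes here; the filesystems that point at the adapter can usually stay as they are.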

3. Then, enable the following bundle in your bundles.php file:

    Oneup\FlysystemBundle\OneupFlysystemBundle::class => ['dev' => true, 'prod' => true],

4. Add the service to `config/services/services.yml`:

    services:
        acme.azure_blob_client:
            class: MicrosoftAzure\Storage\Blob\BlobRestProxy
            factory: "MicrosoftAzure\\Storage\\Blob\\BlobRestProxy::createBlobService"
            arguments:
                - '%env(AZURE_BLOB_STORAGE_CONNECTION_STRING)%'

5. At this point, the Akeneo side should work, but you'll have to configure the environment to define the variable referenced above: `AZURE_BLOB_STORAGE_CONNECTION_STRING`

6. Now before you can refresh the cache and see the data, you’ll have to create your connection string in Azure and ensure the permissions are correct.
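For reference, an Azure Storage connection string generally has the shape shown below. This sketch uses Symfony's convention of declaring a default value for an environment variable under `parameters`. The account name and key are placeholders, and in a real deployment the value would normally come from the environment or a secrets store rather than a committed file.

```yaml
# Sketch only: placeholder credentials, suitable at most for local testing.
parameters:
    # Fallback used when AZURE_BLOB_STORAGE_CONNECTION_STRING is not set in the environment
    env(AZURE_BLOB_STORAGE_CONNECTION_STRING): 'DefaultEndpointsProtocol=https;AccountName=<your-account>;AccountKey=<your-key>;EndpointSuffix=core.windows.net'
```

The real string can be copied from the storage account's access keys page in the Azure portal.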

With any remote storage option, performance concerns should be discussed. On a traditional single-server installation of Akeneo, an uploaded asset is saved to the file system and a reference to it is stored in the database. With Azure Blob Storage (or any other cloud provider), the reference stored in the database is identical, but the file system save is actually an API call that sends the file to a remote server via HTTP. For small numbers of assets, the performance hit is minimal. For larger systems, it's more complicated.

In concrete terms, that is 1 Gbit/second of transfer speed instead of the 6 to 64 Gbit/second of an SSD- or M.2-backed file system (see this article for details). The difference may seem minor, but if you will need to generate transformations for more than 800,000 assets in the future, it can cause major processing delays.
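To put those rates in perspective, here is a back-of-the-envelope calculation for moving a 100 GB asset collection. It assumes decimal units (1 GB = 8 Gbit) and the full theoretical line rate with no protocol overhead, so real transfers will be slower:

$$
\begin{aligned}
100\ \text{GB} \times 8\ \tfrac{\text{Gbit}}{\text{GB}} &= 800\ \text{Gbit} \\
t_{1\,\text{Gbit/s}} &= \frac{800}{1} = 800\ \text{s} \approx 13.3\ \text{min} \\
t_{6\,\text{Gbit/s}} &= \frac{800}{6} \approx 133\ \text{s} \approx 2.2\ \text{min} \\
t_{64\,\text{Gbit/s}} &= \frac{800}{64} = 12.5\ \text{s}
\end{aligned}
$$

Jobs that both read and write each file, such as generating transformations, would pay this cost in both directions.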

Though there may be other requirements for your asset storage, if you are looking to quickly transfer large amounts of data then you should consider the approach described in this article.

Reach out to us at Sitation if you have specific questions or would like to discuss some of the implications in this approach for your business.